Counterfactual Explanations for Anomaly Detection

RISE researchers have developed counterfactual reasoning methods that answer the question: What minimal change would make this data normal instead of anomalous? The aim is to make AI explanations human-readable and actionable.

AI-powered anomaly detection helps monitor complex systems, from industrial machinery to IoT networks, but operators need more than alerts; they need explanations to plan future actions. RISE researchers have developed counterfactual reasoning methods that answer:

What minimal change would make this data normal instead of anomalous?

The Approach

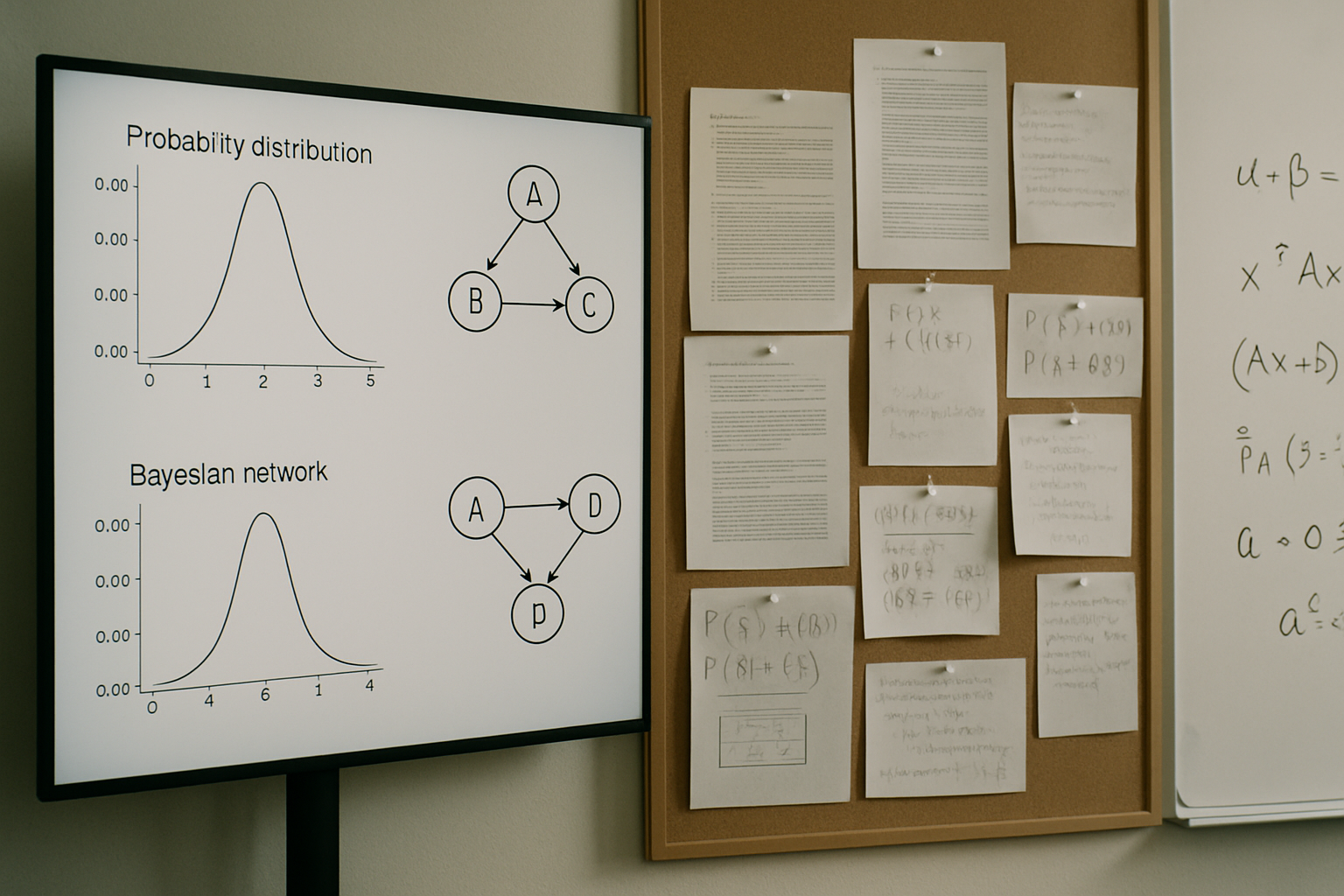

For differentiable models such as autoencoders and graph neural networks, this involves selecting the features that make a sample anomalous and then optimizing only those feature values, producing realistic, interpretable alternatives that the model would consider normal.
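The select-then-optimize procedure can be illustrated with a minimal sketch. It assumes a linear autoencoder (a weight matrix `W` with orthonormal columns, so the reconstruction is `W W^T x`), squared reconstruction error as the anomaly score, a fixed normality `threshold`, and "anomalous features" defined as those with the largest per-feature reconstruction error. All function names, parameters, and the sensor readings are hypothetical, and the analytic gradient stands in for the automatic differentiation a real autoencoder or graph neural network would use.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]  # n x k weight matrix with orthonormal columns (assumed)


def reconstruct(W: Matrix, x: Vector) -> Vector:
    """Linear autoencoder: encode z = W^T x, then decode x_hat = W z."""
    n, k = len(W), len(W[0])
    z = [sum(W[i][j] * x[i] for i in range(n)) for j in range(k)]
    return [sum(W[i][j] * z[j] for j in range(k)) for i in range(n)]


def residual(W: Matrix, x: Vector) -> Vector:
    """Per-feature reconstruction error r = x - x_hat."""
    return [xi - hi for xi, hi in zip(x, reconstruct(W, x))]


def anomaly_score(W: Matrix, x: Vector) -> float:
    """Squared reconstruction error; a score above the threshold counts as anomalous."""
    return sum(r * r for r in residual(W, x))


def counterfactual(
    W: Matrix,
    x: Vector,
    n_select: int = 1,
    threshold: float = 1.0,
    lr: float = 0.05,
    max_steps: int = 1000,
) -> Tuple[Vector, List[int]]:
    """Return (x_cf, selected): a modified copy of x and the indices that were changed."""
    # Step 1: select the features with the largest reconstruction error.
    r = residual(W, x)
    selected = sorted(range(len(x)), key=lambda i: abs(r[i]), reverse=True)[:n_select]

    # Step 2: gradient descent on the anomaly score, updating only the
    # selected features and stopping as soon as the sample scores as normal.
    x_cf = list(x)
    for _ in range(max_steps):
        if anomaly_score(W, x_cf) <= threshold:
            break
        r = residual(W, x_cf)
        for i in selected:
            # With orthonormal W, I - W W^T is a symmetric idempotent projection,
            # so the gradient of ||(I - W W^T) x||^2 with respect to x_i is 2 * r_i.
            x_cf[i] -= lr * 2.0 * r[i]
    return x_cf, selected


if __name__ == "__main__":
    # Hypothetical example: three redundant sensors measuring the same quantity.
    # A one-dimensional latent code reconstructs every sensor as their mean.
    s = 1.0 / math.sqrt(3.0)
    W = [[s], [s], [s]]
    x = [20.0, 20.0, 35.0]
    x_cf, selected = counterfactual(W, x)
    print("changed features:", selected)
    print("counterfactual:", [round(v, 2) for v in x_cf])
```

In this toy example only the third sensor (index 2) is changed, and its value moves from 35 toward the consensus of 20 until the score falls below the threshold. Stopping at the threshold, instead of minimizing the score completely, keeps the change small; adding a penalty on the distance between `x_cf` and `x` is a common alternative way to enforce minimality.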

Practical Applications

In practice, this approach pinpoints failing sensors in redundant systems and identifies early signs of mechanical degradation in vibration data from machines such as motors and compressors.
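Why a counterfactual can isolate a failing sensor in a redundant system is visible in a simple worked case. It assumes an illustrative model, not necessarily the one the researchers use: n sensors measure the same quantity, the detector reconstructs every sensor as the mean of all readings and scores a sample by its squared reconstruction error S, and the counterfactual changes only sensor k, the one with the largest residual.

```latex
\begin{aligned}
\bar{x} &= \frac{1}{n}\sum_{i=1}^{n} x_i, \qquad
S(x) = \sum_{i=1}^{n} \left(x_i - \bar{x}\right)^2, \\
\frac{\partial S}{\partial x_k}
  &= \sum_{i=1}^{n} 2\left(x_i - \bar{x}\right)\left(\delta_{ik} - \tfrac{1}{n}\right)
   = 2\left(x_k - \bar{x}\right)
   \qquad \text{since } \textstyle\sum_{i}\left(x_i - \bar{x}\right) = 0, \\
\frac{\partial S}{\partial x_k} = 0
  &\;\Longrightarrow\; x_k = \bar{x}
  \;\Longrightarrow\; x_k^{*} = \frac{1}{n-1}\sum_{i \neq k} x_i .
\end{aligned}
```

For readings (20, 20, 35), the residuals are (-5, -5, 10), so sensor 3 is selected, and its counterfactual value is x_3* = (20 + 20)/2 = 20, which drives S to zero: the explanation reads "sensor 3 should have reported 20". In this model, with only two redundant sensors the residuals are equal in magnitude, so the detector cannot tell which of the two failed.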

Impact

By making AI explanations more human-readable, this work bridges the gap between complex algorithms and actionable insights, ensuring AI supports, rather than replaces, expert decisions.